Operational Machine Learning Challenges at Scale

The Joint Force is attempting to define the requirements and capabilities of future-state C2 and decision support systems. Commercial industry and technology firms that support mission delivery are learning some important lessons about the processes, technology, and most importantly, the people necessary to develop and maintain AI/ML-enabled complex environments. The Joint All-Domain Command and Control (JADC2) concept acknowledges the nature of this challenge, and Joint leaders are working to integrate communication infrastructures like 5G technology and break down data silos through efforts such as the Army’s Project Convergence and the Air Force’s Advanced Battle Management System (ABMS).

These systems will comprise a complex ecosystem of sensors, connected to targeting and decision nodes, and linked to echeloned units and their associated kinetic and non-kinetic engagement platforms. Joint All-Domain doctrine will likely dictate that this ecosystem assist commanders at all echelons in executing in a highly dynamic and decentralized environment, at an extremely aggressive pace, across intertwined physical, cyber, information, and cognitive terrain. The Joint Force is posturing its communications systems, its C2 processes, and its platforms to begin forming the critical physical and data backbone to support next-generation command in an All-Domain environment.

Adding Artificial Intelligence and Machine Learning (AI/ML) to this advanced C2 capability will require more than solving the difficult problem of data flow and interoperability between advanced sensors, weapons, and communications platforms. Large-scale production ML systems are more than just a collection of models. If the Joint Force wants to replicate a platform like Uber, it must also address the software and data engineering challenges inherent in those capabilities. Commercial companies such as Uber, Facebook, and Google, which handle vast amounts of data, have been working on the problem of scalable and extensible ML environments for at least a decade. Each ML model, once fit with data, is no longer a singular algorithm but a snapshot in time. Without the proper architecture for re-training and tuning, the model cannot be refreshed; as operational data diverge from the data it was trained on, it will slowly (or perhaps quickly) drift and become detrimental to mission success. For peak performance, models must be placed into a purpose-built, analytics-enabled ecosystem that prevents model drift and supports their functionality, interoperability, and maintenance.
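
One way to make the idea of drift concrete is to compare the distribution of an input feature at training time with its distribution in recent operational data. The sketch below uses the Population Stability Index (PSI), a common general-purpose drift statistic; the article itself does not prescribe any particular metric. The function names, the equal-width binning, and the 0.25 retraining threshold (a frequently quoted rule of thumb, not a standard) are all illustrative assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare a feature's training-time distribution with recent production data.

    Returns 0.0 for identical binned distributions; larger values mean more shift.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to width 1.0
    # if the reference feature is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range production values into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each fraction so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


def needs_retraining(psi: float, threshold: float = 0.25) -> bool:
    """Hypothetical gate: flag the model for retraining when PSI exceeds the threshold."""
    return psi > threshold
```

In a production ecosystem a check of this kind would run on a schedule against every monitored feature and model output, and a positive result would trigger the re-training and tuning architecture described above rather than a manual review.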

To support an interdependent ecosystem of models, ML pipelines need to be containerized and portable, and they must support a wide variety of commercial-off-the-shelf (COTS), government-off-the-shelf (GOTS), free and open-source software (FOSS), and custom mission-specific model architectures and ML frameworks. The complexity of these software and data ecosystems does not appear to scale linearly. More interdependent models fed by increasingly large and complex data streams create exponential demand for model maintenance, model storage, model portability, and compute resources.

“We have found that ML at scale of the complexity needed to deliver JADC2 requires: (1) adaptive, highly skilled MLOps Teams, (2) a focus on mission-appropriate, extensible, platform-agnostic solutions, (3) operating within a purpose-built, AI-enabled ecosystem.”

Processes and Technology: AI/ML Ecosystems

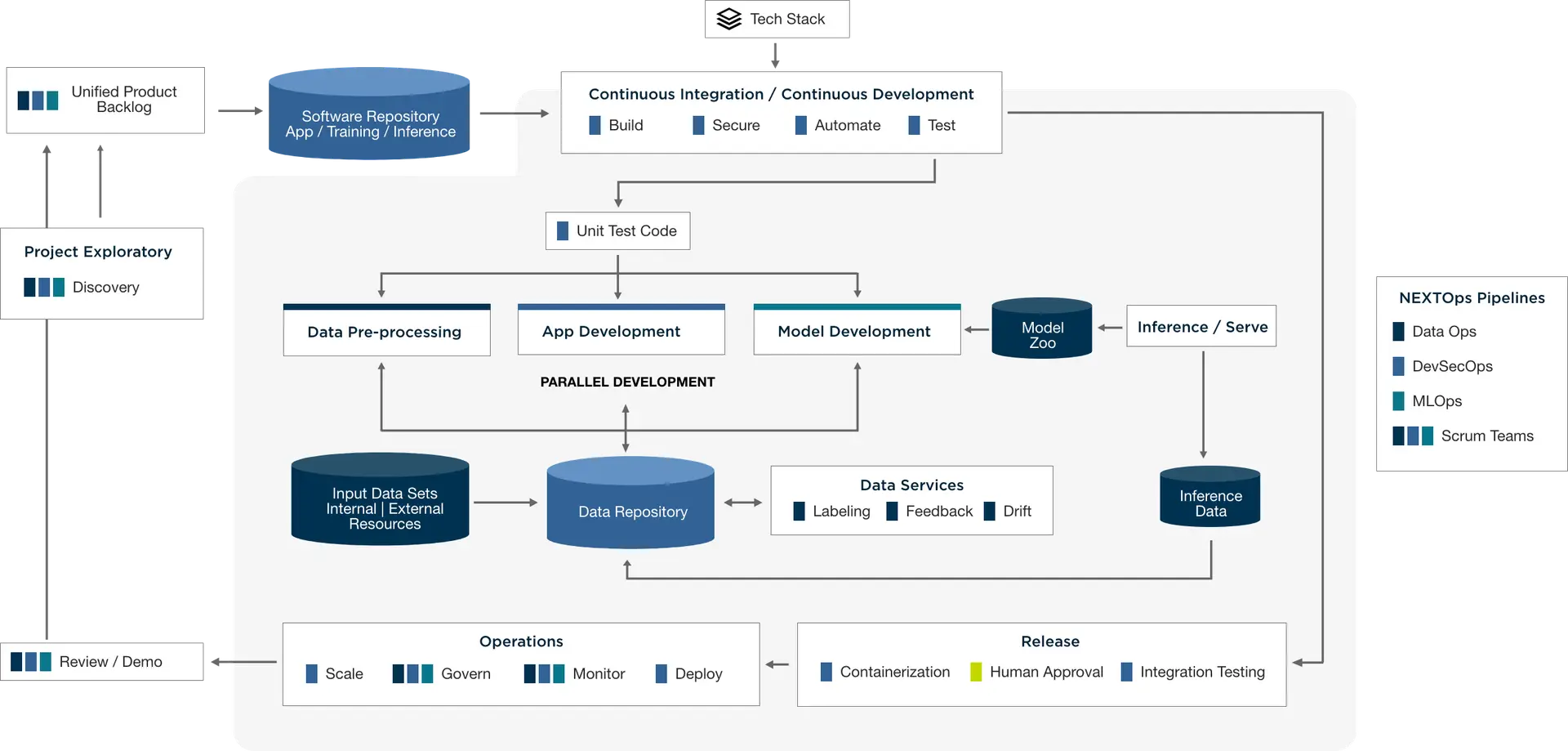

Many of the basic tenets of large-scale production AI/ML-enabling ecosystems are conceptually easy to understand. They consist of data ingest and ETL pipelines, data lakes and other mechanisms for storage, ML training and validation pipelines, and ML inference pipelines. Building out the data architecture, data cleansing, wrangling, standardization, and data interpretation at scale are well-known and daunting obstacles to delivering an Uber-like JADC2 capability. What are less well-defined but no less critical to the success of an AI/ML-enabled JADC2 capability are the processes and platforms required to maintain and interconnect hundreds if not thousands of separate ML models into a single, coherent domain-awareness and decision-support architecture.

Until recently, end-to-end ML solutions typically comprised only the model training process, leaving model deployment, serving, and inference management to bespoke solutions or an ad hoc structure of different COTS and FOSS platforms. Commercial firms are developing ML as a Service and AI as a Service (MLaaS and AIaaS) on platforms such as Google’s TFX and Uber’s Michelangelo to fill this gap and facilitate delivery of their AI/ML-enabled products. For the Joint Force to field a future-proof JADC2 capability, it will need to develop a semi-custom ecosystem and a series of associated standards that will quickly meet or exceed the current size, scale, and capability of these commercial solutions.

Further, this ecosystem will need to adapt to new technology, new model architectures, and new research. The Joint Force will need to establish a set of standards around AI/ML ecosystem engineering to allow for mission-specific customization and integration. Today’s AI/ML environment is a relatively extensible Python-based world. While libraries may extend functionality, there is no guarantee that one Python library will integrate with another. Development of an AI/ML-enabled JADC2 environment will require extensive engineering, integration, and customization regardless of what base MLaaS and AIaaS platforms are borrowed from current industry state-of-the-art. Carefully developed standards for model portability, interoperability, and maintenance will be necessary to enable innovation and customization while maintaining functionality and security.

People: MLOps and Cross-functional Teams

Once engineering and integration standards are defined, the most critical component in delivering an AI/ML-enabling ecosystem will be the highly specialized, agile software development teams capable of addressing these engineering challenges. Central to Uber’s successful delivery has been its exploration of ML Operations (MLOps) as an enterprise operational delivery concept. MLOps teams, still an emerging concept, focus specifically on delivering ML solutions. They combine appropriate software practices and infrastructure (DevSecOps) with the necessary data ingest and data engineering practices (DataOps) and with model training and experimentation (Data Science), and they address inference delivery and model maintenance through a Continuous Integration/Continuous Delivery (CI/CD) process.

AI/ML-enabling solutions are unique software solutions that require specialized cross-functional software delivery teams. Deployment and maintenance of these end-to-end solutions present several software and systems engineering challenges to commercial providers. Adding to the difficulty, the Joint Force will need to tackle these challenges while protecting sensitive information, capabilities, and vulnerabilities and still delivering solutions that meet mission requirements. This will dictate a cleared or partially cleared trusted engineering workforce that can use MLOps practices to engineer an AI/ML-enabled ecosystem that will address mission-specific needs that are not native to commercial applications.

What makes AI/ML-enabling solutions particularly challenging is the reality that production models are, in some ways, like living organisms. They require experimentation and adaptation to train and field, and specific workflows to manage inferences or ensemble results across several separate models. They also require significantly different approaches to maintenance to control model drift and identify bias, and standards adoption to allow for reliable, seamless extensibility. MLOps teams are unique in that they possess not only infrastructure, data engineering, and software development capabilities, but also the data science and ML engineering expertise necessary to adapt models, manage inference pipelines, and field them to meet highly complex mission-specific requirements.

For example, Joint Force operational users might want to run a single source of data through multiple models, enhancing the original data with model inference results. Such a request might involve running multiple computer vision models, each trained to detect separate classes, on the same imagery, then presenting all detections across all models back to a user or downstream node when that imagery is viewed. While this sounds reasonable, it would pose a significant series of engineering challenges and require experimentation to train and adapt current computer vision models, along with a semi-custom inference management pipeline to deliver inferences downstream for use. Maintaining this family of models would require an engineering team capable of verifying and validating training and operational data, so that the models and inference pipelines continue to perform as designed as new data are introduced into the system. The same team would need to incorporate novel preprocessing and added metadata as sensor designers introduce them.
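
The fan-out pattern in this example can be sketched in a few lines, which also shows where the engineering effort hides. The code below is a minimal illustration only: `Detection`, `EnrichedImage`, `enrich_image`, the stub detectors, and the model names are all hypothetical, and real detectors would be calls to model servers rather than local functions. It runs every registered detector over the same image, merges the results into one record, and notes which models failed so that one unavailable model does not block the others.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Detection:
    model_name: str
    label: str
    confidence: float
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class EnrichedImage:
    image_id: str
    detections: List[Detection] = field(default_factory=list)
    failed_models: List[str] = field(default_factory=list)


# Assumed common interface: every detector takes raw image bytes
# and returns a list of detections.
Detector = Callable[[bytes], List[Detection]]


def enrich_image(
    image_id: str,
    image: bytes,
    detectors: Dict[str, Detector],
    min_confidence: float = 0.5,
) -> EnrichedImage:
    """Run every detector on the same image and merge the results."""
    result = EnrichedImage(image_id=image_id)
    for name, detect in detectors.items():
        try:
            found = detect(image)
        except Exception:
            # Record the failure and keep going with the remaining models.
            result.failed_models.append(name)
            continue
        result.detections.extend(d for d in found if d.confidence >= min_confidence)
    # Present the most confident detections first.
    result.detections.sort(key=lambda d: d.confidence, reverse=True)
    return result


# Stub detectors standing in for separately trained models (illustrative only).
def vehicle_detector(image: bytes) -> List[Detection]:
    return [
        Detection("vehicles", "truck", 0.91, (10, 20, 110, 90)),
        Detection("vehicles", "car", 0.32, (200, 40, 260, 80)),
    ]


def vessel_detector(image: bytes) -> List[Detection]:
    return [Detection("vessels", "small_boat", 0.77, (300, 150, 380, 210))]


def broken_detector(image: bytes) -> List[Detection]:
    raise RuntimeError("model server unavailable")


enriched = enrich_image(
    "img-001",
    b"raw-image-bytes",
    {"vehicles": vehicle_detector, "vessels": vessel_detector, "aircraft": broken_detector},
)
```

Even this toy version has to settle a shared output schema, a confidence policy, and a failure policy. Those are the kinds of decisions that portability and interoperability standards would need to fix across hundreds of models.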

Conclusion

As the Joint Force develops standards for the management of inference, model deployment, and model maintenance, government and industry support will be required to develop and maintain the AI/ML-enabling ecosystem that will allow JADC2 and similar systems to keep pace with associated changes in sensor, domain awareness, and engagement platform capabilities.

Fielding this system of systems will also require significant shifts in product management and systems engineering practices. AI/ML-enabled ecosystems generate data- and model-driven continuous change and will demand new deployment and maintenance concepts. While commercial platform providers are working to solve many of the issues noted above, it is highly unlikely that COTS or FOSS tools will directly address unique Joint Force needs without some form of late-stage customization or the creation of mission-specific solutions. The Joint Force must therefore leverage MLOps teams that possess the necessary cross-functional skillsets and agile practices.

References

1. Joint All-Domain Command and Control: Background and Issues for Congress, July 8, 2021. Congressional Research Service. https://fas.org/sgp/crs/natsec/R46725.pdf