This article is part one of a series on data management across cloud, hybrid cloud, and community cloud environments, written for our government customers who are working to derive more value from their data sources at scale and with greater speed.

The data management challenge

The advent of cloud computing and access control technologies has disrupted data management design. This disruption happened primarily due to the rapid compute and storage scalability of cloud computing providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

As more organizations adopt cloud service providers, the data management challenge becomes more apparent. Many organizations store their data in departmental silos with relational databases, SQL server backends, network file shares, and application-specific data stores, each with unique schemas, file formats, and data descriptions (metadata). This decentralized practice creates data sprawl and generates departmental data that can only be shared through manual requests, requiring database administrators to follow manual workflows and develop custom queries against rigid Structured Query Language (SQL) schemas.

These practices worked well enough when innovation horizons were slow and barriers to entry were high, such as the need to provision physical data centers and staff large IT-centric teams. However, in the rapidly growing Everything-as-a-Service (XaaS) cloud ecosystems and marketplaces, slow data provisioning cycles and long lead times create weaknesses that fast-moving small teams can exploit to create asymmetric advantages in the intelligence and information domains. Established industry and government organizations have valuable historical data stored inside data centers and, in many cases, duplicative data that is not normalized or version controlled. Tapping into that data has many potential benefits:

- performing trend analysis for competitive advantage

- developing efficiencies in existing processes and reducing expenses

- sharing interoperable data with authorized consumers to encourage innovation

Access to these benefits and future opportunities requires modern data management techniques, platforms, and governance policies. Utilizing cloud service providers makes moving, storing, and securing data easier with self-service provisioning and repeatable design patterns to facilitate interoperability.

Data management cloud design patterns

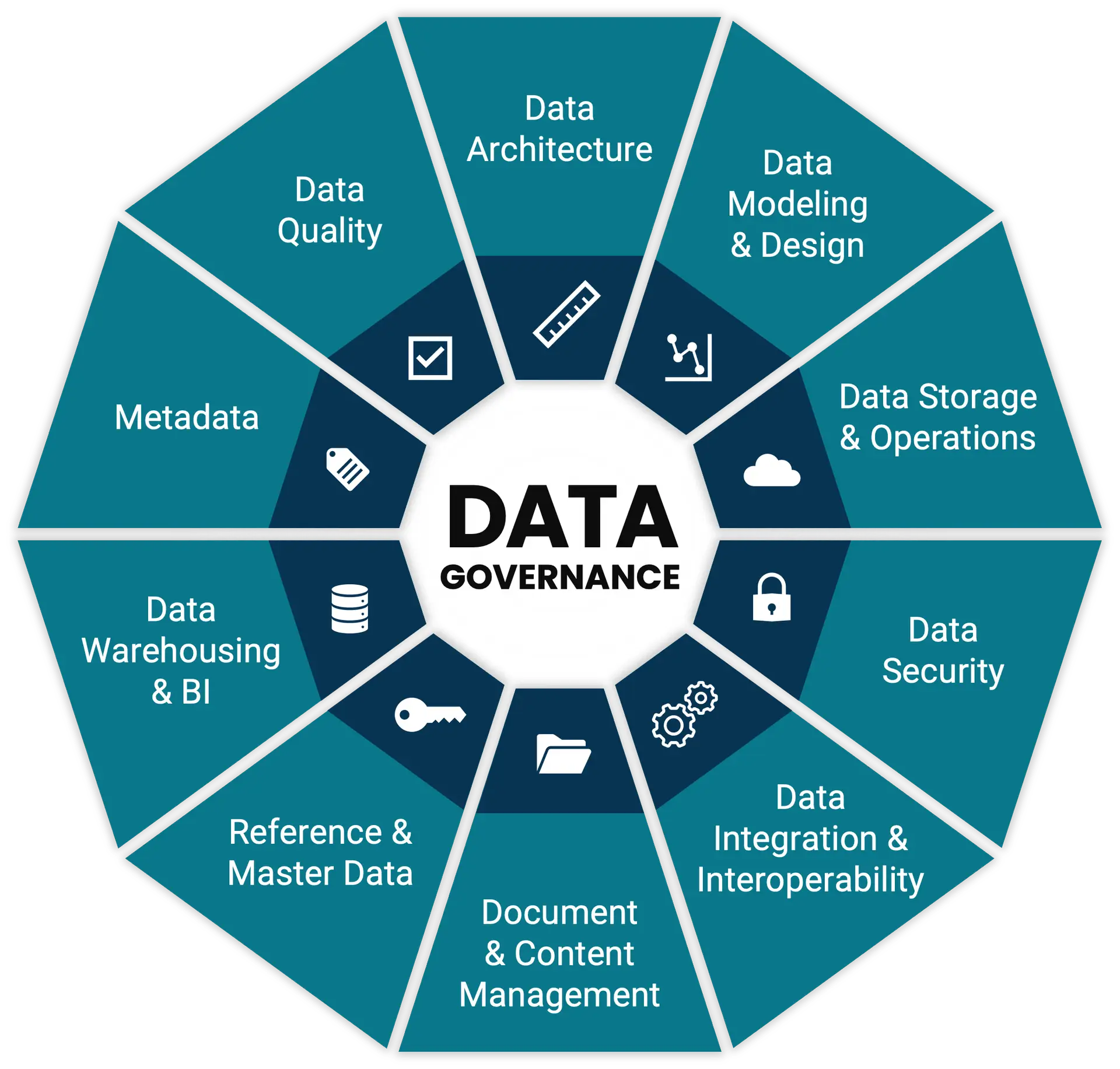

Data-driven organizations require better access, self-service, privacy, integrity, quality, regulatory compliance, governance, security, and interoperability. Better data governance and flexibility are the keys to achieving these data management goals. The fundamentals of data management should start with a data strategy built from organizational goals aligned with the Data Management Body of Knowledge (DMBOK)1.

Data management also requires a framework to make data more discoverable and accessible to authorized users. A framework maximizes the effective use of data to create a competitive advantage and increase the return on investment it takes to collect, store, and process that data into high-quality intelligence.

Our intelligence, defense, and federal customers can achieve more mature data management using industry best practices and repeatable cloud design patterns. Data fabric, data hub, and data mesh are examples of cloud-friendly design patterns. The common goals for these design patterns are:

- treat data deliverables as a product

- host the data on a data platform capable of self-service access

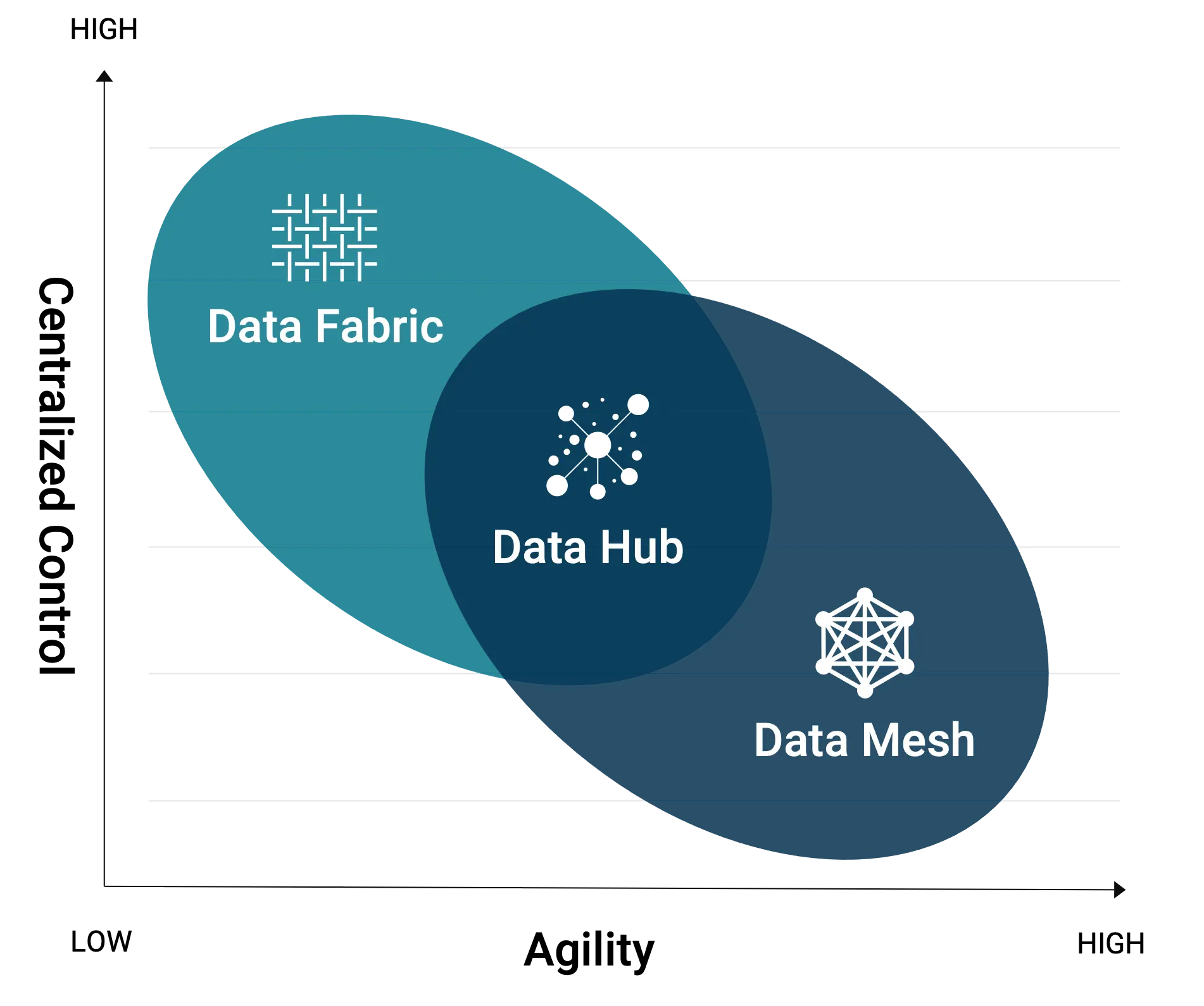

These design patterns do not conflict with central data storage frameworks such as data lake, data lakehouse, or data warehouse, which focus more on how the data is stored, ingested, and consumed than on treating data as a product. The design patterns differ in how they balance the need for centralized control against the agility required to remain innovative and competitive.

Next is a quick breakdown of the design patterns.

Data Fabric

A data fabric offers the most centralized control and uses technologies such as semantic knowledge graphs, active metadata management, and embedded machine learning2 to manage centralized and decentralized data sources. Data products are developed around enterprise use cases, and departments submit requests for project prioritization to centralized data teams that make organization-wide decisions on project adoption and resource allocation.

Data fabrics lower the complexity of data management by using AI/ML automation and machine-friendly metadata to enable self-service for data consumers, with solutions that are cloud-agnostic, microservices-based, API-driven, interoperable, and elastic3. A data fabric requires a centralized data team for the organization that derives data quality and access requirements from data monitoring dashboards, semantic knowledge graphs (SKGs), and centrally prioritized departmental requirements requests. The teams are centralized at the organizational headquarters level, and smart data storage metrics enable automatic data tiering to save on storage expenses.
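
The automatic data tiering described above can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the `DatasetMetrics` fields, the tier names, and the thresholds are hypothetical and do not come from any specific data fabric product.

```python
from dataclasses import dataclass


@dataclass
class DatasetMetrics:
    """Hypothetical per-dataset storage metrics (field names are assumptions)."""
    name: str
    days_since_last_access: int
    reads_last_30_days: int


def choose_tier(metrics: DatasetMetrics,
                hot_min_reads: int = 10,
                cold_after_days: int = 180) -> str:
    """Return 'hot', 'warm', or 'cold' using illustrative thresholds."""
    if metrics.reads_last_30_days >= hot_min_reads:
        return "hot"    # frequently read: keep on the fastest storage
    if metrics.days_since_last_access >= cold_after_days:
        return "cold"   # untouched for a long time: cheapest storage
    return "warm"       # everything in between


datasets = [
    DatasetMetrics("daily_reports", days_since_last_access=1, reads_last_30_days=42),
    DatasetMetrics("quarterly_audit", days_since_last_access=60, reads_last_30_days=0),
    DatasetMetrics("legacy_archive", days_since_last_access=400, reads_last_30_days=0),
]
tiers = {d.name: choose_tier(d) for d in datasets}
print(tiers)
```

In practice the thresholds would be set by the central data team from observed usage and storage pricing; the point of the sketch is only that tiering decisions can be driven by metrics rather than by manual requests.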

Data fabric platforms are primarily built for hybrid and multi-cloud environments, with managed centralized APIs to consume data and a common user interface to lower complexity. When used alone, a data fabric tends to lack agility because of two failure modes: centralized data and IT teams become overwhelmed, and those teams lack the domain-specific expertise in departmental data needed to develop the requested data products.

Data Hub

A data hub balances flexibility and centralized control. It works to make sense of the data, and everything about the data is stored in a metadata-centric database4. A data hub ensures the organization knows what data it has stored by using an SKG to encode descriptions of the data (known as “metadata”) in a form that both humans and machines can read. This design pattern centralizes the metadata repository, tightly coupling it with the data catalog and providing the context of the data.

This tight coupling of metadata and the data catalog provides a federated search capability, which makes it faster for authorized data consumers to find the data they need. The data hub also provides centrally managed and secure APIs to interact with the data and create data products such as graphs, trend analysis, machine learning (ML), and decision support Artificial Intelligence (AI) products.
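
As a rough illustration of a central metadata catalog that lets authorized consumers search across sources, consider the sketch below. The `CatalogEntry` and `MetadataCatalog` names, the role labels, and the sample entries are all hypothetical; a real data hub would typically back this with a knowledge graph and an identity provider rather than an in-memory dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Metadata describing one dataset (all fields are illustrative)."""
    dataset_id: str
    source_system: str
    description: str
    tags: frozenset
    allowed_roles: frozenset


class MetadataCatalog:
    """In-memory stand-in for a centralized metadata repository."""

    def __init__(self) -> None:
        self._entries = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.dataset_id] = entry

    def search(self, term: str, user_roles: set) -> list:
        """Return IDs of matching datasets the caller is authorized to see."""
        term = term.lower()
        hits = []
        for entry in self._entries.values():
            if not (entry.allowed_roles & user_roles):
                continue  # caller holds none of the roles this dataset allows
            in_description = term in entry.description.lower()
            in_tags = term in {t.lower() for t in entry.tags}
            if in_description or in_tags:
                hits.append(entry.dataset_id)
        return sorted(hits)


catalog = MetadataCatalog()
catalog.register(CatalogEntry("geo-001", "network file share",
                              "Satellite imagery index",
                              frozenset({"imagery", "geospatial"}),
                              frozenset({"analyst"})))
catalog.register(CatalogEntry("geo-002", "relational database",
                              "Imagery collection requests",
                              frozenset({"imagery"}),
                              frozenset({"collection_manager"})))
catalog.register(CatalogEntry("hr-007", "SQL server backend",
                              "Personnel records",
                              frozenset({"hr"}),
                              frozenset({"hr_admin"})))

print(catalog.search("imagery", {"analyst"}))
```

The search only touches metadata, so the underlying data can stay in its original source system; authorization is checked before any match is returned.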

The result is a mixture of features and benefits of the data mesh and data fabric to create reusable organizational knowledge built on common data standards. Data hubs integrate with data fabrics to increase agility; data hubs integrate with data meshes to increase federated control and consistency with a tightly coupled data catalog.

Data Mesh

A data mesh offers the greatest flexibility and the least rigid centralized control: departments have domain-specific autonomy to make technical changes to their data architecture, within federated policies and guardrails. Data mesh requires building data product teams for each departmental domain data product line, much as DevSecOps teams were built for application modernization. Local data authorities have ownership, and data is enrolled into the central data catalog locally and stored in distributed data repositories managed by the individual departments.
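
One way to picture local enrollment under federated guardrails is the sketch below. The `DataProduct` fields, the classification labels, and the three checks are assumptions chosen for illustration; an organization would define its own policies.

```python
from dataclasses import dataclass

# Assumed classification labels; a real policy would define its own set.
ALLOWED_CLASSIFICATIONS = {"public", "internal", "restricted"}


@dataclass
class DataProduct:
    """A domain-owned data product (fields are hypothetical)."""
    name: str
    domain: str
    owner: str
    classification: str
    has_published_schema: bool


def guardrail_violations(product: DataProduct) -> list:
    """Check a product against organization-wide (federated) policies."""
    problems = []
    if not product.owner:
        problems.append("missing data product owner")
    if product.classification not in ALLOWED_CLASSIFICATIONS:
        problems.append("unknown classification: " + product.classification)
    if not product.has_published_schema:
        problems.append("missing published schema")
    return problems


def enroll(central_catalog: dict, product: DataProduct) -> bool:
    """Enroll a product in the central catalog only if it passes the guardrails."""
    if guardrail_violations(product):
        return False
    central_catalog[product.domain + "/" + product.name] = product
    return True


central_catalog = {}
ok = enroll(central_catalog,
            DataProduct("fleet_readiness", "logistics", "j.doe", "internal", True))
rejected = enroll(central_catalog,
                  DataProduct("draft_feed", "logistics", "", "secret-ish", False))
print(ok, rejected, sorted(central_catalog))
```

The department decides what to build and where to store it, while the shared checks keep every enrolled product discoverable and consistently described.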

Distributing data in a data mesh requires data teams to be purpose-built around the products they want to create. It moves data product decisions out to the departments, which increases flexibility and speed while adding data product team personnel costs. Data teams are decentralized, and each departmental domain requires a data product owner, data engineers, software engineers, and a product manager, depending on its size. Data engineers and the product manager must continuously analyze ongoing changes to data patterns and recommend design and deployment changes5.

Data mesh platforms are primarily built using cloud-native offerings deeply integrated into specific cloud vendor services, which may be interoperable with other providers but require application gateways and refactoring of data product integrations and automation pipelines from one vendor to another.

Where does an organization start?

Data management promotes data maturity, increasing data’s value through enrichment and processing, as well as the development of data products that leaders can use for competitive advantage, increased security, and the efficient management of resources.

Where an organization’s goals fall on the spectrum between flexibility and centralized control determines the trade-offs it faces and which design pattern it should start from. Regardless of where you start, the other data management design patterns can co-exist and overlap to provide more agility or more control.

- Start with a data fabric: To tightly enforce compliance standards and centralized data product decisions while allowing distributed multi-cloud data storage, the best approach is to build a data fabric.

- Start with a data hub: If the organizational goal is to centralize control and governance while allowing flexibility for data product teams to create modular open systems architecture (MOSA) applications and systems, the best approach is to build a data hub.

- Start with a data mesh: To achieve ultimate data product creation velocity while allowing for decentralized data product teams and adjustable decoupled distributed control, the best approach is to create a data mesh.
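
The three starting points above can be summarized as a simple lookup. This is only a restatement of the list in code form; the `Priority` names are hypothetical labels, and a real decision would weigh far more than a single priority.

```python
from enum import Enum


class Priority(Enum):
    """Hypothetical labels for an organization's dominant goal."""
    CENTRALIZED_COMPLIANCE = "tight compliance and centralized product decisions"
    BALANCED_GOVERNANCE = "central governance with flexible product teams"
    PRODUCT_VELOCITY = "maximum data product creation velocity"


# Mapping taken directly from the three bullets above.
STARTING_PATTERN = {
    Priority.CENTRALIZED_COMPLIANCE: "data fabric",
    Priority.BALANCED_GOVERNANCE: "data hub",
    Priority.PRODUCT_VELOCITY: "data mesh",
}


def starting_pattern(priority: Priority) -> str:
    """Return the suggested design pattern to start from."""
    return STARTING_PATTERN[priority]


print(starting_pattern(Priority.BALANCED_GOVERNANCE))
```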

Which cloud design pattern works best for your organization?

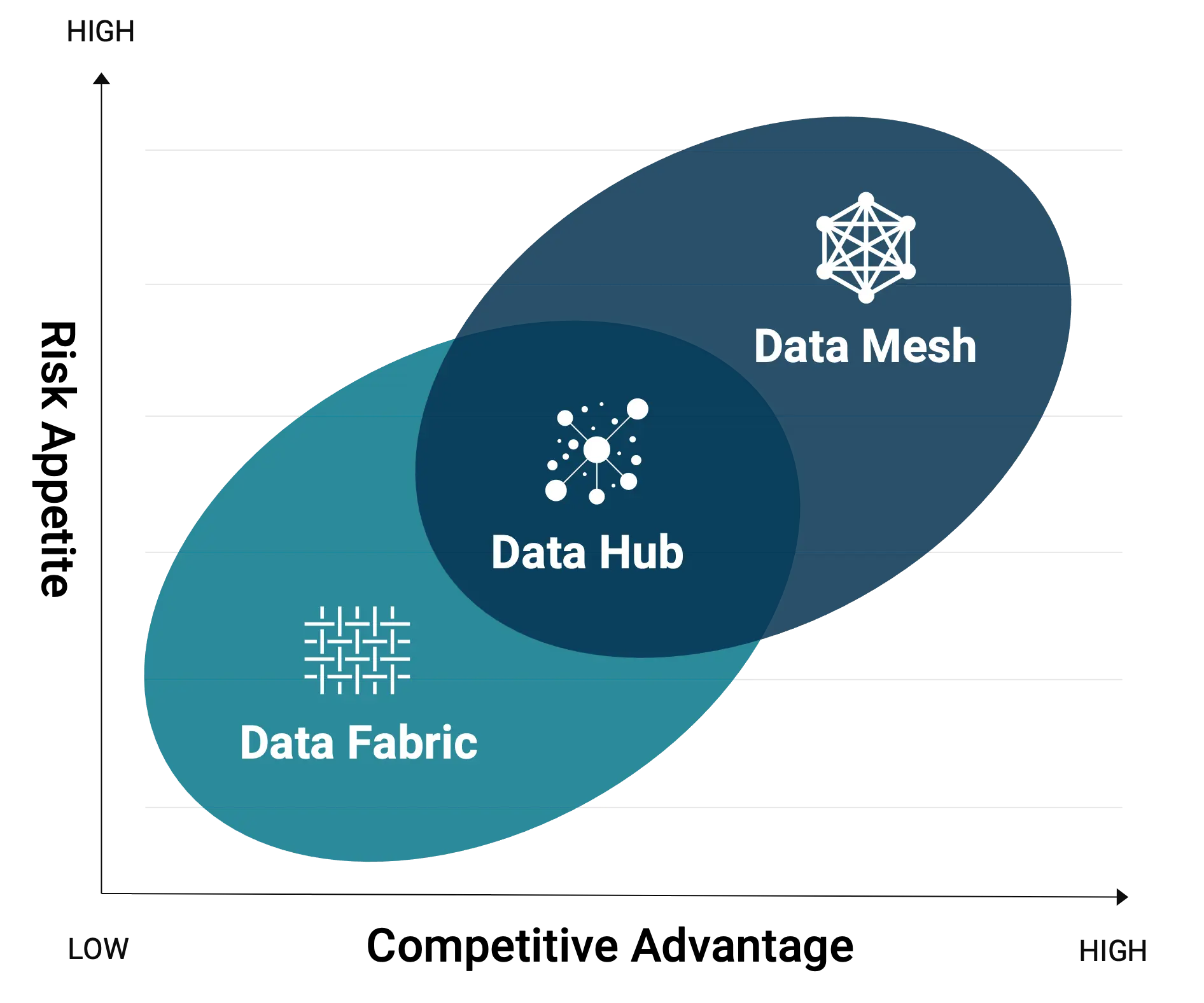

Cloud design patterns are not mutually exclusive. They can be fused to meet business goals and gain competitive mission advantage while working within the organization’s risk appetite.

There are fusions of data mesh and data fabric that some have dubbed “meshy-fabric.” The data hub sits between the two and can fuse with either. When a data lake or data warehouse is used for centralized data storage and processing, the lake or warehouse becomes another node in a data mesh managed by a departmental team, or another repository in the data fabric or data hub managed by a central team.

Always consider your data strategy and risk appetite

Cloud ecosystem data management design patterns exist to meet the main requirements of a data-driven organization: better access, self-service, privacy, integrity, quality, regulatory compliance, governance, security, and interoperability. Design patterns also address common failure-modes for big data platforms that exist at scale when using only data lakes and data warehouses6.

One failure-mode for big data platforms comes from the increase of “ubiquitous data and source proliferation,” leading to a lack of domain-focused expertise to develop data products and analysis. Centralized teams slow down the development of domain-focused data products by not having departmental expertise for a specific data domain; in short, centralized teams lack data domain-focused SMEs.

Another failure-mode for big data systems is the long response time to satisfy the data consumer needs, causing friction with central data teams due to prioritizations of the “organizations’ innovation agenda” and ever-growing data consumer demands. This leads to friction with departmental data consumers competing against each other for change and service request priorities. In the end, the centralized data team may struggle to keep pace with ever-growing demand for data transformations, pipelines, and domain-focused expertise.

The main trade-offs between data fabric, data hub, and data mesh revolve around the risk tolerance that many organizations associate with the variable level of decentralized control and consistency of organizational data to achieve competitive advantage. Data mesh changes the supply-chain of data products to decentralized data product creation using autonomous, domain-focused data teams and, in the words of its inventor Zhamak Dehghani, “with the hope of democratizing data at scale to provide business insights.” The trade-offs are represented in Figure 3 with overlapping design patterns.

The data strategy and risk appetite heavily influence the selection of the appropriate cloud design pattern. It is critical to invest time and resources to develop an organization-wide data strategy based upon the eleven core data management knowledge areas described within the DMBOK7. Organizations should re-evaluate their overall risk appetite in relation to the challenges of remaining competitive before considering which design pattern is right for their unique requirements.

Conclusion

The intent of this article is not to focus on specific cloud service providers (CSP), cloud service offerings (CSO), or licensed data platforms. The intent is to help you find the right design pattern for your specific organization, with the primary focus on maturing data sources into valuable data products so that you can become a data-driven organization.

The solutions presented in future articles will consider compliance frameworks such as FedRAMP8 and government-specific requirements such as Department of Defense and Intelligence Community policies concerning cloud, DevSecOps, and data management. The goal is to share knowledge and industry best practices while considering the cultural practices, governance policies, and technical debt influencing organizations undergoing digital transformation.

NT Concepts partners with our customers wherever they are in their digital transformation journey to discover the program goals and find the right mix of technical, personnel, and program management to meet the customer’s goals. Our data and cloud management capabilities are central to creating data readiness to enable AI/ML capabilities. As your organization’s data maturity and data readiness grow, so does the ability to leverage that data and design use-cases around AI.9

The next article in this series on data management focuses on cloud-agnostic solutions for each of these data management design patterns. We will highlight the benefits and trade-offs between a cloud-agnostic approach and a cloud-native, tightly coupled data integration service.

References

1 DAMA International, Body of Knowledge, https://www.dama.org/cpages/body-of-knowledge. Accessed 25 Aug. 2022.

2 Gupta, Ashutosh. “Using Data Fabric Architecture to Modernize Data Integration.” Gartner, 11 May 2021, https://www.gartner.com/smarterwithgartner/data-fabric-architecture-is-key-to-modernizing-data-management-and-integration.

3 Randall, Lakshmi. “Data Fabric vs Data Mesh: 3 Key Differences, How They Help and Proven Benefits.” Informatica, 1 Oct. 2021, https://www.informatica.com/blogs/data-fabric-vs-data-mesh-3-key-differences-how-they-help-and-proven-benefits.html.

4 Hollis, Chuck. “Of Data Fabrics and Data Meshes.” MarkLogic, 28 Feb. 2022, https://www.marklogic.com/blog/data-fabric-data-mesh/.

5 Perlov, Yuval. “Data Fabric vs Data Mesh: Demystifying the Differences.” K2View, 17 Nov. 2021, https://www.k2view.com/blog/data-fabric-vs-data-mesh/.

6 Dehghani, Zhamak. “How to Move beyond a Monolithic Data Lake to a Distributed Data Mesh.” Martinfowler.com, 20 May 2019, https://martinfowler.com/articles/data-monolith-to-mesh.html.

7 Khan, Tanaaz. “What Is the Data Management Body of Knowledge (DMBOK)?” DATAVERSITY, 6 May 2022, https://www.dataversity.net/what-is-the-data-management-body-of-knowledge-dmbok/.

8 “The Federal Risk and Management Program Dashboard.” FEDRAMP, https://marketplace.fedramp.gov/.

9 Panetta, Kasey. “The CIO’s Guide to Artificial Intelligence.” Gartner, 5 Feb. 2019, https://www.gartner.com/smarterwithgartner/the-cios-guide-to-artificial-intelligence.

Nicholas Chadwick

Cloud Migration & Adoption Technical Lead Nick Chadwick is obsessed with creating data-driven government enterprises. With an impressive certification stack (CompTIA A+, Network+, Security+, Cloud+, Cisco, Nutanix, Microsoft, GCP, AWS, and CISSP), Nick is our resident expert on cloud computing, data management, and cybersecurity.